DeepSeek

What is DeepSeek?

DeepSeek, reviewed here in its DeepSeek-Coder V2 release, is an open-source AI model developed by the Chinese company DeepSeek AI. It is designed specifically for coding and mathematical reasoning tasks. It uses a Mixture-of-Experts (MoE) architecture that activates only a portion of its 236 billion parameters for each input, so a request uses far less compute than it would on a dense model of the same size.
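To make the "activates only a portion of its parameters" idea concrete, here is a minimal sketch of generic top-k expert routing. It is a toy illustration, not DeepSeek's actual implementation: the four experts, the gate scores, and the function names (`top_k_route`, `moe_forward`) are all hypothetical, and in a real model the gate scores come from a learned router rather than a hard-coded list.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by gate weight."""
    routes = top_k_route(gate_scores, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    return output, routes


# Hypothetical toy setup: four "experts" that simply scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
gate_scores = [0.1, 2.0, -1.0, 1.5]  # made-up router scores for one token

output, routes = moe_forward(10.0, experts, gate_scores, k=2)
print("experts used:", [i for i, _ in routes])
print("output:", round(output, 3))
```

Only two of the four experts run for this input; the other two are skipped entirely, which is where the compute saving of an MoE model comes from.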

Additionally, it supports 338 programming languages and offers a 128K-token context window, enabling it to work across large codebases and long documents. With reported benchmark results that outperform models like GPT-4 Turbo on HumanEval and MATH, it is well suited to developers, researchers, and enterprises looking for a high-performance, scalable, open-source AI coding solution.

| DeepSeek Summarized Review | |
| --- | --- |
| Performance Rating | A |
| AI Category | AI Assistant, Code Generation, Mathematical Reasoning |
| AI Capabilities | MoE architecture, Natural Language Processing |
| Pricing Model | Open-source, free for commercial use |
| Compatibility | Web-based interface, API access |
| Accuracy | 4.7 |

Key Features

The key features include:

- Extensive Language Support

- High Context Window

- Efficient Architecture

- Benchmark Performance

- Open-Source Accessibility

Who Should Use It?

- Developers & Engineers: Suitable for those seeking advanced code generation and debugging assistance across multiple programming languages.

- Enterprises & Startups: Ideal for organizations aiming to integrate powerful AI coding tools without vendor lock-in.

- Educators & Researchers: Beneficial for academic institutions focusing on AI, coding, and mathematical problem-solving.

- AI Enthusiasts & Contributors: Its open-source nature allows for experimentation, customization, and contribution to model improvements.

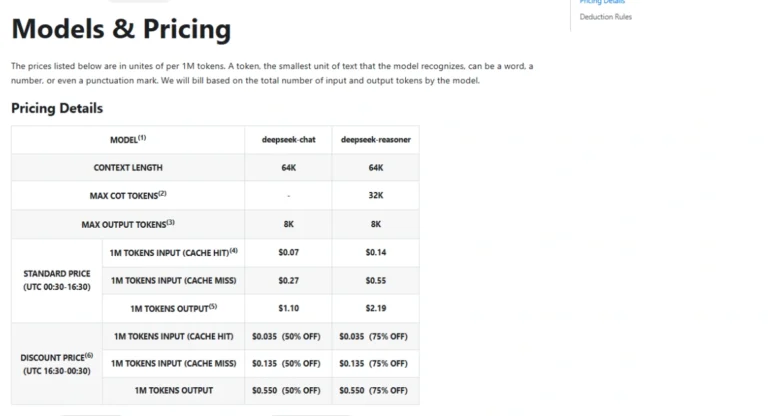

Pricing & Plans

DeepSeek is open-source and free for both commercial and research purposes. For more information, see the official DeepSeek website.

Pros & Cons

Pros

- Reported benchmarks show it outperforming leading models such as GPT-4 Turbo on coding and math tasks (HumanEval and MATH).

- Free for commercial use, fostering innovation and collaboration.

- The MoE architecture activates only a portion of the parameters per input, which reduces the compute needed for each request.

- Accommodates a wide range of programming languages.

Cons

- Deployment and customization may require advanced knowledge.

- Running the model locally demands significant computational power.

- Primarily optimized for English and Chinese, which may not meet all global needs.

Final Verdict

DeepSeek-Coder V2 stands out as a powerful, open-source alternative to proprietary AI models. Its reported benchmark results show strong performance in code generation and mathematical reasoning.

Moreover, its extensive language support, efficient architecture, and open accessibility make it a valuable tool for developers, enterprises, and researchers seeking advanced AI capabilities without the constraints of closed-source platforms.

FAQs

Can I run DeepSeek-Coder V2 locally?

- Yes, but it requires substantial computational resources because of the model's size.
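As a rough illustration of what "substantial" means, the sketch below estimates the memory needed just to hold the weights of a 236-billion-parameter model at a few common precisions. It is back-of-the-envelope arithmetic, not an official hardware requirement: the helper name `weight_memory_gb` is hypothetical, and the figures ignore the KV cache, activations, and runtime overhead, all of which add to the total.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


TOTAL_PARAMS = 236e9  # parameter count quoted in this review

# Bytes per parameter at common storage precisions. Which precisions are
# practical for this model is an assumption made here for illustration.
PRECISIONS = {"16-bit (FP16/BF16)": 2.0, "8-bit": 1.0, "4-bit": 0.5}

estimates = {
    name: weight_memory_gb(TOTAL_PARAMS, size) for name, size in PRECISIONS.items()
}
for name, gb in estimates.items():
    print(f"{name}: about {gb:.0f} GB for weights alone")
```

Note that the MoE design lowers the compute per request, not the memory footprint: any expert can be selected for a given input, so all of the weights still have to be loaded.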

How does it compare to GPT-4 Turbo?

- According to its reported benchmark evaluations, DeepSeek-Coder V2 outperforms GPT-4 Turbo on coding and math tasks such as HumanEval and MATH.

Is DeepSeek-Coder V2 free to use?

- Absolutely! It’s open-source and free for both commercial and research purposes.